Data Lakehouse

Combining data lake and data warehouse

Stackable for Data Lakehouses

The Stackable Data Platform offers a comprehensive solution for implementing a Data Lakehouse architecture, seamlessly combining the flexibility and scalability of a data lake with the management capabilities and structured access of a data warehouse.

The Data Lakehouse

A data lakehouse represents an innovative approach to data architecture, merging the vast data storage capabilities of data lakes with the structured querying and data management features of data warehouses. With the Stackable Data Platform, organizations can leverage Kubernetes to deploy and scale their data lakehouse architecture, ensuring flexibility, efficiency, and high availability across their data ecosystems.

The data lakehouse architecture of the Stackable Data Platform is suitable for organizations that want to use data for digital transformation and improve business agility.

Highlighted data apps from the Stackable Data Platform for data lakehouses

Apache NiFi

Facilitates ingestion, data flow management and automated data exchange between systems.

- Easy Data Routing and Transformation: Offers a user-friendly interface for data flow management, supporting rapid design and deployment of processing pipelines.

- System Integration: Connects to a variety of data sources and sinks, facilitating data ingestion from disparate systems.

- Data Lineage: Tracks data flow from source to destination, enhancing auditing and compliance.

- Flexibility: Customizable processors and the ability to handle various data formats and sizes.

Apache Airflow

Streamlines data workflows with precision and scalability.

- Advanced Workflow Orchestration: Apache Airflow provides comprehensive workflow planning and management that enables precise control of data processing tasks within the Stackable Data Platform.

- Dynamic Pipeline Creation: Easily define, schedule, and monitor complex data pipelines using Airflow’s intuitive UI and powerful programming framework. Customize your workflows to match your data processing needs perfectly.

- Scalable and Reliable: Airflow can be easily scaled. Simultaneous workflows can be handled effortlessly so that data tasks are executed reliably regardless of volume.

- Efficient monitoring and logging: Airflow’s monitoring functions enable quick identification and resolution of problems and ensure smooth data operation.
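
The idea behind a dependency-driven pipeline can be illustrated without Airflow itself. The following is a minimal sketch in plain Python, not Airflow's API: the task names (`ingest`, `clean`, `aggregate`, `publish`) are hypothetical, and the standard-library `graphlib` module stands in for the scheduler to show how declared dependencies determine execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate": {"clean"},
    "publish": {"aggregate"},
}


def run_pipeline(dependencies):
    """Visit every task after its upstream tasks and return the execution order."""
    executed = []
    for task in TopologicalSorter(dependencies).static_order():
        # A real orchestrator would launch the task here and record its state.
        executed.append(task)
    return executed


order = run_pipeline(pipeline)
print(order)
```

In an actual deployment, the same dependency structure would be declared as an Airflow workflow, which adds scheduling, retries, and monitoring on top of the ordering shown here.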

Apache Spark

Offers powerful data processing capabilities, enabling distributed analytics and machine learning on large data sets.

- In-Memory Computing: Accelerates processing speeds by keeping data in RAM, significantly faster than disk-based alternatives.

- Advanced Analytics: Supports complex algorithms for machine learning, graph processing, and more.

- Fault Tolerance: Resilient distributed datasets (RDDs) provide fault tolerance through lineage information.

- Language Support: Offers APIs in Python, Java, Scala, and R, broadening its accessibility and usability.
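
Fault tolerance through lineage can be sketched in a few lines. The example below is a toy model in plain Python and does not use Spark's API: the class `LineageDataset` and its methods are hypothetical. It records the transformations that produced a dataset, so a lost result can be rebuilt by replaying them from the source rather than by restoring a stored copy.

```python
class LineageDataset:
    """Toy dataset that records how it was derived instead of storing results."""

    def __init__(self, source, transforms=()):
        self.source = source          # the durable input data
        self.transforms = transforms  # ordered lineage of element-wise functions

    def map(self, fn):
        # Record the step in the lineage; nothing is computed yet.
        return LineageDataset(self.source, self.transforms + (fn,))

    def compute(self):
        # Replay the whole lineage from the source data.
        result = list(self.source)
        for fn in self.transforms:
            result = [fn(x) for x in result]
        return result


readings = LineageDataset([1, 2, 3])
scaled = readings.map(lambda x: x * 10).map(lambda x: x + 1)

first = scaled.compute()
# Simulate losing the computed result: replaying the lineage rebuilds it.
rebuilt = scaled.compute()
print(first, rebuilt)
```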

Trino

Enables (virtualized) data access across different data sources and improves the flexibility and speed of queries in data architectures.

- Flexible Table Formats: Supports Apache Iceberg and Delta Lake.

- Fast Query Processing: Engineered for high-speed data querying across distributed data sources.

- Federated Queries: Allows querying data from multiple sources, simplifying analytics across disparate data stores (data federation).

- Scalable and Flexible: Easily scales to accommodate large datasets and complex queries.

- User-Friendly: Supports SQL for querying, making it easily accessible to users familiar with relational databases.
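
To make the federated query idea concrete, here is a small analogy using Python's built-in `sqlite3` module. It is a sketch only: SQLite stands in for Trino, two separate in-memory databases stand in for independent data sources, and the table names and rows are invented for illustration. In Trino, tables are addressed as `catalog.schema.table`, but the principle of joining across sources in one SQL statement is the same.

```python
import sqlite3

# Two independent in-memory databases play the role of separate data sources.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")

conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO main.orders VALUES (?, ?)",
    [(1, 20.0), (1, 5.0), (2, 7.5)],
)
conn.executemany(
    "INSERT INTO crm.customers VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace")],
)

# One SQL statement joins tables that live in different databases.
rows = conn.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)
conn.close()
```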

Apache Iceberg and Delta Lake

Stackable underlines its commitment to open and flexible data solutions by supporting two leading table formats: Apache Iceberg and Delta Lake. Customers can therefore choose the option that best fits their specific requirements. Below, we outline key factors to consider when choosing between Delta Lake and Apache Iceberg. We nevertheless recommend testing both with real use cases before making a decision.

Apache Iceberg:

- Ideal if particular emphasis is placed on user-friendliness and cross-platform compatibility.

- Supports continuous schema evolution and the analysis of data spanning long periods of time.

- Its efficiency in query performance makes it a superior option for data warehousing needs.

Delta Lake:

- A natural fit for teams that already run Delta Lake architectures.

- Well suited to setups that demand ACID transactions.

- A strong choice for environments where high data integrity is critical.

Open Policy Agent (OPA)

Integrates advanced data governance and fine-grained access control into the data lakehouse.

- Unified Policy Framework: OPA provides a powerful, unified framework for governing access across the entire data lakehouse, ensuring consistent enforcement of security policies, data privacy regulations, and compliance requirements.

- Declarative Policy as Code: Implement governance policies declaratively using Rego, OPA’s high-level language. This approach allows for the development of clear, understandable policies that can be version-controlled, reviewed, and deployed as part of your CI/CD pipeline.

- Fine-Grained Access Control: Achieve granular control over data access and manipulation within the data lakehouse architecture.

- Scalable and High-Performance: OPA also supports large, complex data environments and ensures that governance policies are evaluated efficiently without compromising access to data or application performance.
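
The policy-as-code approach can be illustrated with a toy example. The sketch below is plain Python, not Rego and not OPA's API: the rule list `POLICY`, the function `is_allowed`, and the roles and tables are all hypothetical. It shows the general pattern of keeping access rules as reviewable data and denying every request that no rule explicitly allows.

```python
# Hypothetical policy data: which role may perform which action on which table.
POLICY = [
    {"role": "analyst", "action": "select", "table": "sales"},
    {"role": "engineer", "action": "select", "table": "sales"},
    {"role": "engineer", "action": "insert", "table": "sales"},
]


def is_allowed(request, policy=POLICY):
    """Default deny: allow only if some rule matches every field it specifies."""
    return any(
        all(request.get(key) == value for key, value in rule.items())
        for rule in policy
    )


analyst_read = is_allowed({"role": "analyst", "action": "select", "table": "sales"})
analyst_write = is_allowed({"role": "analyst", "action": "insert", "table": "sales"})
print(analyst_read, analyst_write)
```

With OPA, rules of this kind would be written in Rego and evaluated by the policy engine, so the decision logic stays outside the applications that enforce it.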

Exemplary Use Cases

Real-Time Decision Making: Make informed decisions instantly. Use Apache Airflow to automate the data pipelines that keep fresh data flowing into the lakehouse. Combined with Trino for high-speed querying, this setup enables organizations to adapt swiftly to changing market conditions and operational demands.

Enhanced Customer Insights: Utilize Apache Spark for processing complex datasets and Trino for querying structured and unstructured data, supported by modern data formats like Apache Iceberg and Delta Lake. Apache Superset visualizes these insights, driving personalized customer experiences and strategic decision-making.

Innovative Product Development: Accelerate product innovation by utilizing the advanced analytical capabilities of Apache Spark and the seamless data querying of Trino across diverse data sources and formats. This fosters a culture of rapid experimentation and development. Also, Apache Airflow streamlines the pipeline from data collection to analysis, speeding up the iteration cycle for product development.

Supply Chain Optimization: Optimize your supply chain with predictive analytics derived from sources such as streaming data. Capture real-time events and process the data with Apache Spark. Use Trino to query it, enabling dynamic adjustments to enhance efficiency and reduce operational costs.

Social Media Monitoring and Analysis: Ingest social media data with Apache NiFi, process it with Apache Spark, and query it using Trino to gain real-time insights into market trends, customer sentiment, and brand engagement. This strategic intelligence can then inform content strategies and brand management decisions.

Showcase

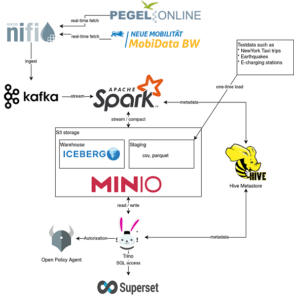

This demo of the Stackable Data Platform shows a data lakehouse.

It uses Apache Kafka®, Apache NiFi, Trino with Apache Iceberg, and the Open Policy Agent.

Explore our blog post on how Stackable can be used to create a data lakehouse with

- dbt

- Trino

- Apache Iceberg

Unpack the simplicity of modern ELT/ETL within a streamlined data lakehouse architecture.

Our specialist for Data Lakehouses

Need more Info?

Contact Sönke Liebau to get in touch with us:

Sönke Liebau

CPO & Co-Founder of Stackable

Subscribe to our Newsletter

With the Stackable newsletter, you’ll always stay up to date on the latest from Stackable!
