Introduction

Apache Spark has come a long way since its 1.0 release in 2014. It was originally envisioned as a more generic model for working with data than the earlier MapReduce paradigm. It has been widely adopted since then and has sparked (pun intended) numerous subsequent and similar processing models such as Apache Flink, Apache Kafka Streams and Apache Samza.

Arguably, one of the factors behind Spark's success is that it does not concern itself much with resource allocation and relies on external systems for this instead. In the early days this was usually YARN, which is part of the Apache Hadoop project, but in recent years the focus has shifted to Kubernetes as the execution platform for Spark jobs. With this shift came the question of how best to start, manage and track Spark jobs running on Kubernetes.

The Google Spark on Kubernetes Operator was first released in 2017. Since the initial big push, however, activity on the operator has slowed, and it is currently no longer actively maintained. Even so, it has been the de-facto operator for running Spark jobs on Kubernetes ever since its inception: until now!

First Impressions and Questions

At Stackable we looked at the Google operator as part of the Marispace-X initiative, where data processing required a Spark on Kubernetes operator. However, because of a few missing features (specifically, greater flexibility in managing and provisioning job dependencies) and the general lack of activity on the Google project, we decided to roll our own instead.

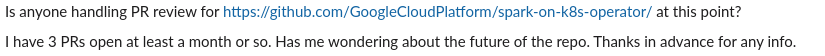

Recently, someone on the Data on Kubernetes Slack apparently ran into the same issue, as the screenshots below from different Slack communities show:

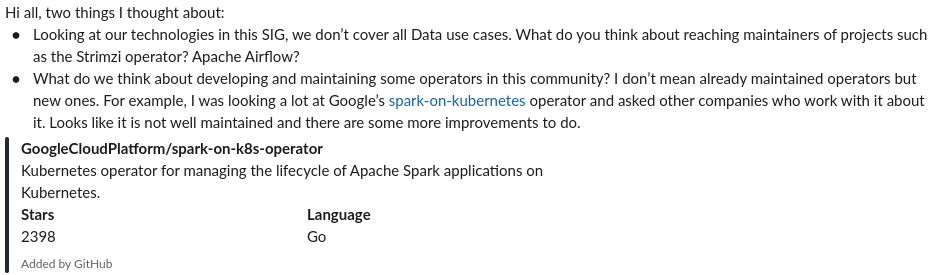

Additionally, people working at Google posted that they were looking into options to revive development of the operator; however, not much has happened since then. The commit history over the last year paints a similar picture.

This prompted us at Stackable to sit down and draw up a quick comparison of the two operators.

Criteria

Before we get to the comparison, let’s recap our motivation: what did we want our operator to offer? Much of this was covered in a blog article we wrote at the time, but these are the main things we considered:

- Product support: new Spark versions and their components (e.g. Spark History Server) should be supported in a timely way

- Kubernetes support: users should be able to deploy the operator in a variety of environments: on-premise or in the cloud, open-source or enterprise

- Dependency management: there should be different ways to provision job dependencies, both static (baked into the Spark image) and dynamic (external S3 buckets, a secondary image, ConfigMaps)

- Logging: log output should be persisted and searchable

- Standalone or platform-integrated: users should have the freedom to use the operator either as a first-class component within the Stackable Data Platform (SDP), or as a largely standalone tool (in which case its only dependencies are the SDP secret- and commons-operators)

The Comparison

| Feature | Stackable spark-k8s-operator | Google spark-on-k8s-operator |
|---|---|---|
| Basic idea | Build and execute a spark-submit job | Build and execute a spark-submit job |
| Support | Active community and commercial support; 19 open issues (53 closed); project start: April 2022 | Not officially supported by Google; 400+ open issues (570+ closed); project start: November 2017 |
| Releases | 6 releases since August 2022 | One release since October 2022 |
| Supported Kubernetes versions | 1.24, 1.25, 1.26 | Unclear, but seems to support up to 1.26 and 1.27 |
| Supported OpenShift versions | Certified for 4.10, 4.11 and 4.12; 4.13 in the next release | Does not seem to be supported for 4.9+; not certified |
| Supported base Spark versions | 3.3.0, 3.4.0; all with integrated Python 3.9 or 3.11 | 2.4.0, 2.4.4, 2.4.5, 3.0.0, 3.1.1 (See here); no mention of Python/Scala versions |
| Operating systems | Linux; multi-arch in the works | Not specified (seems to be Linux only) |
| Scheduling | None built in, but integrates with Airflow | Provides cron scheduling; support for Volcano |
| Restarts | None built in, but can be achieved with Airflow and custom scripts | Automatic application re-submission for updated SparkApplication objects; automatic application restart with a configurable restart policy; automatic retries of failed submissions with optional linear back-off |
| Deployment | Helm/stackablectl, OLM | Helm |
| Pod customization (volume mounts, affinities etc.) | Via pod templating | Via mutating webhooks |
| Images | One image used for job/driver/executor; can be custom, self-hosted or Stackable-provided; secondary image for resource loading | Can have separate images for driver, executor and init |
| Provisioning of external resources | Provided in the base image; secondary resources image; S3 access; ConfigMaps | Provided in the image; standard Spark provisioning via S3/HDFS configuration settings |
| Working with other products | Full integration with all products in SDP: discovery mechanisms, demos etc. | No native support |
| History Server | Supported: TLS, custom certificates, re-usable connection/bucket references | Not supported directly |
| LDAP integration | Supported | Does not seem to be supported |
| CRD versioning | Planned | Unclear |
| Metrics | Built-in Prometheus support | Built-in Prometheus support |
| Logging | Supported: log aggregation and persistence with pluggable sinks | Not supported; workarounds are possible |
| Volumes | Supported | Supported |
| Secrets | Backed by secret-operator: secret search across namespaces, easy creation of custom truststores, etc. | Standard Kubernetes mechanisms |
| Service account | Built-in but overridable (pod overrides) | Declared via CRD |
| Kerberos | Not supported (yet) | Not supported |
| Hadoop configuration | Not supported directly | Supported |
| Deploy mode | Cluster | Cluster, client, in-cluster |
| Benchmarking, performance recommendations | Benchmarks in progress in the Marispace-X project | Nothing documented |
| SparkApplication creation | kubectl | kubectl, sparkctl |
| Configuration (fairly similar, partly because we based the scope of our CRD on Google's 🙂) | Via CRD: volumes, affinities, resource settings, packages, repos, etc. | Via CRD: volumes, affinities, resource settings, packages, repos, etc. |
| Top 10 open issues by thumbs-up (of those in the Google list, 7 are already available in SDP and 1 is planned) | Add support for Volcano (type/feature-improvement; available in Google's operator) | Move away from the webhook for driver/executor pod configurations in favour of pod templates (enhancement; in SDP); Updating to Spark 3.2.0 (in SDP); Spark Thrift Server (STS) CRD; Update k8s-api to v1.21 (to support ephemeral volumes) (in SDP); Helm Upgrade Causes the Spark Operator To Stop Working; Add support for arm64 (planned for SDP); Airflow Integration (in SDP); Could we reconsider involved the spark history server in this repo? (in SDP); Support spark 3.4.0 (in SDP); Support setting driver/executor memory and memoryLimit separately (in SDP) |
| License | OSL-3.0 | Apache-2.0 |
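Both operators ultimately build and run a spark-submit call, and the Stackable operator customizes pods through Spark's native pod templates rather than a mutating webhook. As a rough illustration of what that amounts to, here is a minimal Python sketch that assembles such a command line. The `--conf` keys are standard Spark-on-Kubernetes settings, but the function name `build_spark_submit`, the image tag and the file paths are hypothetical, and this is not the code either operator actually runs.

```python
from typing import List, Optional


def build_spark_submit(
    master_url: str,
    image: str,
    main_file: str,
    driver_template: Optional[str] = None,
    executor_template: Optional[str] = None,
    packages: Optional[List[str]] = None,
) -> List[str]:
    """Assemble a cluster-mode spark-submit command line for Kubernetes (hypothetical helper)."""
    cmd = [
        "spark-submit",
        "--master", master_url,        # e.g. "k8s://https://<api-server>:<port>"
        "--deploy-mode", "cluster",    # the driver runs in its own pod
        "--conf", f"spark.kubernetes.container.image={image}",
    ]
    # Pod templates: Spark uses these pod specs as the starting point for the
    # driver/executor pods, so volume mounts, affinities etc. can be declared
    # here instead of being patched in by a mutating webhook.
    if driver_template is not None:
        cmd += ["--conf", f"spark.kubernetes.driver.podTemplateFile={driver_template}"]
    if executor_template is not None:
        cmd += ["--conf", f"spark.kubernetes.executor.podTemplateFile={executor_template}"]
    # Dynamic dependencies: Maven coordinates resolved at submit time.
    if packages:
        cmd += ["--packages", ",".join(packages)]
    # The application itself comes last.
    cmd.append(main_file)
    return cmd


if __name__ == "__main__":
    # Hypothetical registry, image tag and template path, for illustration only.
    print(" ".join(build_spark_submit(
        "k8s://https://kubernetes.default.svc:443",
        "my-registry/spark:3.4.0",
        "local:///opt/spark/examples/src/main/python/pi.py",
        driver_template="/tmp/driver-template.yaml",
        packages=["org.apache.hadoop:hadoop-aws:3.3.4"],
    )))
```

The appeal of pod templates is that customization stays declarative and goes through a mechanism Spark itself supports, so no extra admission webhook has to be deployed and kept running in the cluster.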

We hope the table gives a fair and unbiased overview of the main differences between the two operators; please contact us if we’re missing anything.

The main benefits of the Stackable operator come down to its tight integration into our Stackable Data Platform, its active maintenance with regular updates, and a team behind it focused on enterprise readiness. It also supports more recent Spark versions. The need for this is evident from the open issues on the Google operator, many of which concern support for newer Spark versions, CVEs raised against the images and the like.

In a professional context, the fact that Stackable offers commercial support for the operator often turns out to be the most relevant factor. This is not limited to having someone to call for help: other factors such as CVE evaluation and regular security updates are becoming ever more important here.

Also, the Stackable operator is certified on OpenShift, which, even if we say so ourselves, is no small feat!

Google’s operator, on the other hand, racks up points for maturity and feature richness, supporting scheduled jobs, automatic restarts (particularly useful for Spark Streaming jobs), Volcano and Hadoop configuration out of the box.
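One of these restart features, automatic retries of failed submissions with optional linear back-off, can be pictured with a short Python sketch. The two fields correspond to `onSubmissionFailureRetries` and `onSubmissionFailureRetryInterval` in the `restartPolicy` of the Google operator's `SparkApplication`. The class and function names, and the assumption that each attempt waits the base interval multiplied by the attempt number, are ours for illustration and are not taken from the operator's code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SubmissionRetryPolicy:
    """Hypothetical mirror of two restartPolicy fields in the Google operator's CRD."""
    retries: int           # cf. onSubmissionFailureRetries
    interval_seconds: int  # cf. onSubmissionFailureRetryInterval


def linear_backoff_delays(policy: SubmissionRetryPolicy) -> List[int]:
    """Wait before each re-submission, assuming linear back-off (interval * attempt)."""
    if policy.retries < 0 or policy.interval_seconds < 0:
        raise ValueError("retries and interval must be non-negative")
    # Attempt n (1-based) waits n times the base interval, so delays grow
    # steadily rather than doubling as they would with exponential back-off.
    return [policy.interval_seconds * attempt for attempt in range(1, policy.retries + 1)]


if __name__ == "__main__":
    print(linear_backoff_delays(SubmissionRetryPolicy(retries=3, interval_seconds=20)))
    # → [20, 40, 60]
```

With three retries and a 20-second interval, this yields waits of 20, 40 and 60 seconds, i.e. two minutes of back-off in total before the submission is given up.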

We started this evaluation as an internal project to see which areas we need to focus on to catch up. The biggest request from the community is better scheduling support via Volcano, YuniKorn or Kueue, which we are already tracking.

Summing It Up

As usual, there is no “winner”, but your own use case may lead you to favour one of the operators. At Stackable we are committed to the continued development of our operator, which means supporting new Spark and Kubernetes versions, ensuring OpenShift compatibility and making sure it works seamlessly with the other Stackable Data Platform components such as Hadoop, Hive Metastore and HBase.

We’d love your input and feedback. You can find us in the #spark-operator channel on the Kubernetes Slack, on our community Discord or on GitHub Discussions.