TL;DR

At Stackable, we’re always exploring innovative ways to make our technical content more accessible and engaging. Recently, we experimented with GenAI-driven content creation by turning our technical documentation into a podcast.

The best part? The entire process – from scraping the docs to generating the final podcast – took less than an hour! Here’s a breakdown of how we did it. Or just listen to the final – surprisingly acceptable result 📳:

1) Downloading Our Tech Docs from GitHub

Our technical documentation is hosted on GitHub and built using Antora, a powerful tool designed for managing documentation sites. Antora stores our docs in .adoc (AsciiDoc) format, making it easy to organize and manipulate. We downloaded the content from our Tech Docs GitHub repository to begin the process of transforming it into a podcast.

2) Combining AsciiDoc Files into One

After downloading the .adoc files, we needed to combine them into a single comprehensive document for easier handling. To simplify this task, we leveraged OpenAI to generate a Python script that automated the process of merging all .adoc files. This allowed us to efficiently gather and prepare the content for the next steps.

While originally being a short back and forth dialogue with ChatGPT, we basically came up with sth. similar to this prompt for the code generation:

„Write a Python script that combines all .adoc files from a directory into a single output file. The script should accept two command-line arguments: the input directory and the output file. For each .adoc file, add a header in the output file with the file name. After that, append the content of each .adoc file, separated by two new lines.“

And here’s the GenAI-generated Python code we finally used, setting the stage for podcast generation. Good enough for a one time experiment…

import argparse

from pathlib import Path

def combine_adoc_files(input_dir, output_file):

input_dir = Path(input_dir)

with open(output_file, 'w', encoding='utf-8') as outfile:

for file_path in input_dir.rglob('*.adoc'):

outfile.write(f"--- File: {file_path} ---\n")

with open(file_path, 'r', encoding='utf-8') as infile:

outfile.write(infile.read())

outfile.write("\n\n")

def main():

parser = argparse.ArgumentParser(description='Combine all AsciiDoc (.adoc) files from a directory into a single file.')

parser.add_argument('input_dir', type=str, help='The directory to search for AsciiDoc files.')

parser.add_argument('output_file', type=str, help='The file to write combined AsciiDoc content to.')

args = parser.parse_args()

combine_adoc_files(args.input_dir, args.output_file)

if __name__ == '__main__':

main()3) Using Google’s NotebookLM to Create the Podcast

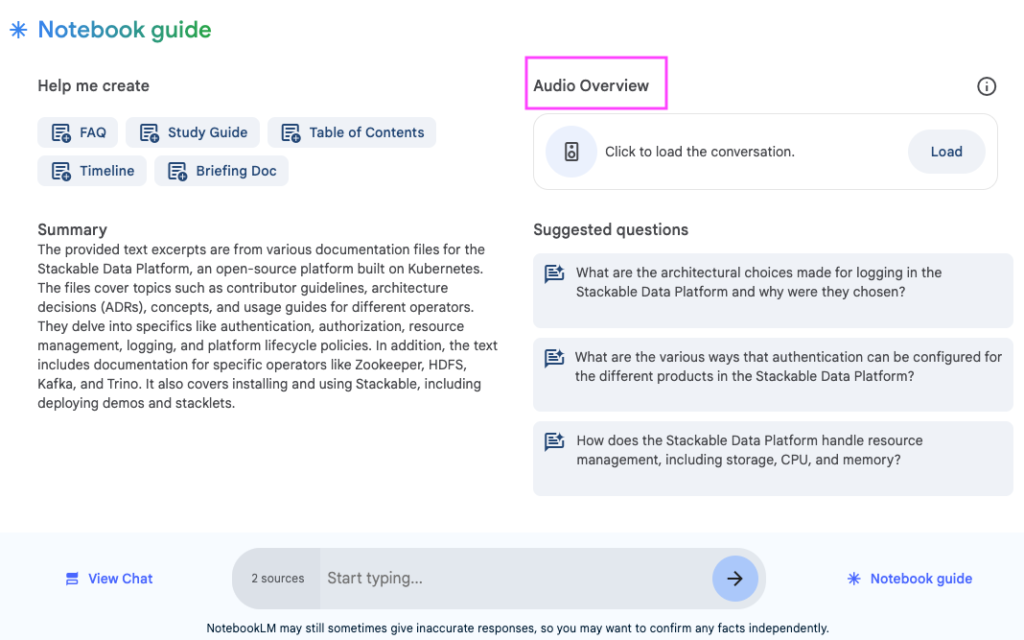

Once we had a single combined document, we used Google’s NotebookLM, an AI tool designed to convert large inputs into summaries – and podcasts. By feeding the AsciiDoc content into NotebookLM and using its „Audio Overview“ feature, we quickly created a 15-minute podcast summarizing key technical concepts from our documentation – all in less than an hour from start to finish!

Conclusion: When GenAI Meets Data, Quality is Still King

This experiment demonstrated how fast today’s GenAI can streamline content creation. In less than an hour, we went from downloading technical documentation to producing a podcast of at least semi-professional quality. But whether we use commercial tools like NotebookLM or open source alternatives, it’s the quality of the content that makes the difference.

At Stackable, as we continue to explore the GenAI landscape, both open source and commercial, one thing remains constant: high quality data is still king. And even in an enterprise context, a robust data platform like our Stackable Data Platform ensures that its content can be effectively leveraged with innovative GenAI tools. GenAI may be the future, but data backed by a solid platform is as important as ever.

Epilog: Why did We Use Commercial tools?

As an open source company, we usually focus on open source tools. For this project, however, we wanted to move quickly and also test Google’s new GenAI service. While it was convenient, the entire process could be replicated using open source alternatives:

- OpenAI alternative: Today, there are many alternatives to OpenAI. A popular one is Meta’s Llama 3.1/.2 model, which can be run locally but is not made available under an open source license. A few models, however, are available under open source licenses. Falcon-40B-Instruct from Technology Innovation Institute (TII) is using the Apache 2.0 license. Read an intro about this model here. Any of these models can be used to generate the podcast dialog, providing a flexible and open source-like solution.

- Text-to-Speech: Mozilla DeepSpeech or Coqui TTS are excellent open source text-to-speech options that could potentially provide similar results for podcast creation without the need for proprietary software.